The Windows Container Nightmare: Massive Image Sizes and Timeouts!

Frustration, annoyance, and a lot of wasted time – that’s what comes to mind when dealing with Microsoft Windows. As a developer, you’re always looking for the best tools to get the job done efficiently, and Windows can make that a real challenge. This time Google is also to blame.

Take, for example, a VStools Windows Docker image that should have everything you need to compile your project – the compiler, linker, and nmake. Sounds perfect, right? Wrong. It turns out that the image doesn’t have two crucial components – msbuild.exe and devenv.exe – which are required to compile .sln files.

So what do you do? You start doing research, trying to figure out how to install the Community Edition of VS 2019 and VS 2022. Finally, after much effort, you manage to get it to build. But then you run into another problem – image sizes.

Each image is a whopping 86GB, and you need to push it to a remote registry, in our case the Google Container Registry (GCR). That shouldn’t be a problem, right? It’s just storage space. But it turns out that it’s not that simple. GCR’s timeout for pushing a single image layer is only two hours, and my internet connection tops out at 5MBps. In two hours, that means I can push only about 35.16GB (5MB per second for 7,200 seconds is 36,000MB). That’s far below the 86GB image size, so it shouldn’t work at all. But here’s the kicker: the 86GB image compresses to about 40GB, which is just 5GB over what I can upload before the timeout. Docker retries a push when it fails, so it ends up attempting to push the same images over and over.
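For anyone who wants to redo the math for their own connection, here is a minimal sketch of the back-of-the-envelope calculation. It assumes a perfectly sustained upload rate and 1GB = 1024MB; the function names are made up for illustration and are not part of Docker or any Google tooling.

```python
def max_upload_gb(rate_mb_per_s: float, timeout_hours: float) -> float:
    """Data (in GB, 1 GB = 1024 MB) that fits through the pipe before the timeout."""
    seconds = timeout_hours * 3600
    return rate_mb_per_s * seconds / 1024


def min_rate_mb_per_s(size_gb: float, timeout_hours: float) -> float:
    """Sustained upload rate (MB/s) needed to push size_gb before the timeout."""
    seconds = timeout_hours * 3600
    return size_gb * 1024 / seconds


if __name__ == "__main__":
    # Numbers from this post: 5 MBps uplink, 2 hour timeout, ~40 GB compressed.
    budget = max_upload_gb(5, 2)
    needed = min_rate_mb_per_s(40, 2)
    print(f"Upload budget before timeout: {budget:.2f} GB")
    print(f"Rate needed for a 40 GB push: {needed:.2f} MB/s")
    print("Fits" if budget >= 40 else "Does not fit")
```

With my numbers the budget falls short of 40GB, and the required rate lands a bit above what my line can do, which is why every attempt died close to the finish line.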

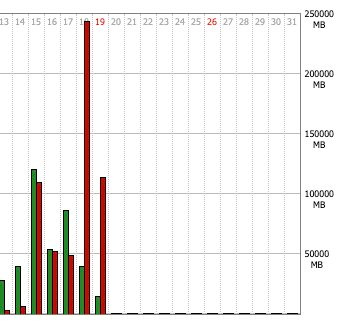

Over the last three days, my system has tried to push the two images (86GB each before compression) quite a few times. According to my DD-WRT logs, it has attempted to upload 450GB of data... UNSUCCESSFULLY!

PS: I did of course get it to work. We are #devops after all.

#cloud #windows #gcr #googlecloud
